Archeology, Politics, Red Flags

In this part, we cover one approach to modernization and its possible caveats.

Legacy systems usually have a monolithic structure, with layers of business logic added over years of operation. The microservice architecture is the modern response to the old, sprawling monolith. After all, there is no sense in replacing a monolith with another monolith, right?

Such a transition generally means "eating an elephant one bite at a time": re-implementing the functionality step by step to move from the old system to the new one. But do we need to bring the old mess to the new home? That is the critical question to answer.

You can't repeat the past

There are two undeniable statements:

- The new system can't do things exactly like the old one. You will not create an exact copy even if you try hard to achieve a 100% match. You will get a different system anyway.

- There is no sense in making the new system act exactly like the old one. If you are replicating the full functionality, you most likely need some help understanding what you are doing.

For instance, the Legacy's entity structure might be outdated and no longer match the current business domain. The entire data definition needs to be revised: some entities will lose attributes they had, and some will cease to exist. As a result, the user and application interfaces are also subject to complete revision.

A Business Analyst is usually involved in what I call "software archeology." The context has shifted between then and now, or has been lost entirely. The responsible people have left or forgotten many details. No one knows why some feature or piece of logic was added, or whether it is used now or ever was. You can spend time investigating the reasoning and end up with nothing. In this case, there are two options: bring the functionality into the new system (just in case), or cut it and see whether that hurts someone. The second option might be painful and have consequences, so be cautious.

Deconstructing the Colossus

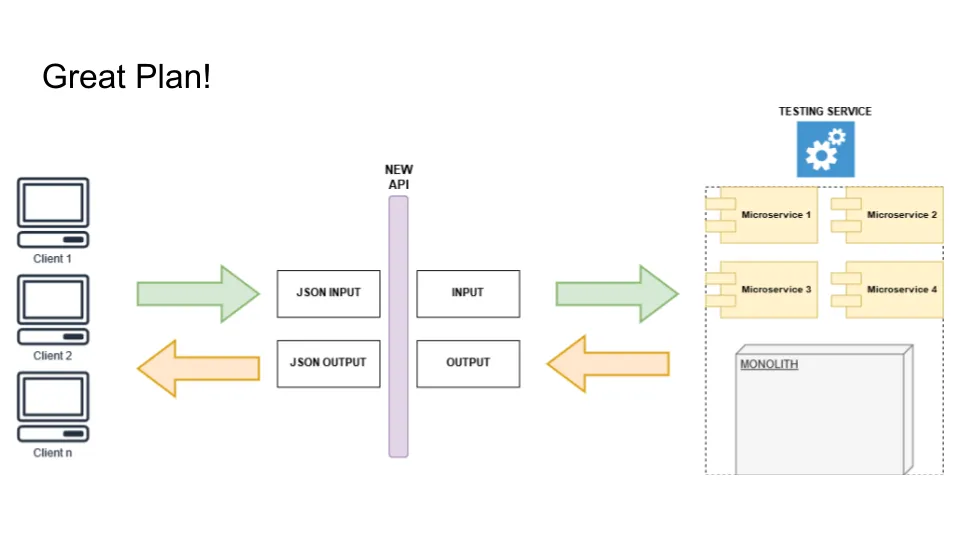

If we are talking about a legacy backend that multiple consumers use via an API, the best strategy is to:

- Wrap the Legacy with a new API layer (API Gateway) that replicates the current payload and introduces the new data format or API type (e.g., XML to JSON, SOAP to RESTful API, etc.).

- Make the data conversion happen in that new layer, so the Legacy still works with its own data structure but is now detached from the consumers.

- Make consumers migrate to calling the Gateway instead of the Legacy directly. Be persistent and, at some point, cut off direct access. However, there is a must-have condition: the data conversion must work flawlessly and without performance concerns.
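
The three steps above can be sketched in a few lines. This is only an illustration under stated assumptions: `legacy_get_customer`, the `Customer` payload, and the field names are hypothetical stand-ins, and a real Gateway would sit behind an HTTP framework rather than plain functions.

```python
import json
import xml.etree.ElementTree as ET


def legacy_get_customer(customer_id: str) -> str:
    """Hypothetical stand-in for the Legacy call; it keeps returning XML."""
    return (
        f"<Customer><Id>{customer_id}</Id>"
        "<FullName>Ada Lovelace</FullName></Customer>"
    )


def xml_to_dict(xml_payload: str) -> dict:
    """Convert the flat legacy XML into the shape the consumers will see."""
    root = ET.fromstring(xml_payload)
    return {
        "id": root.findtext("Id"),
        "fullName": root.findtext("FullName"),
    }


def gateway_get_customer(customer_id: str) -> str:
    """Gateway endpoint: consumers get JSON, the Legacy keeps speaking XML."""
    legacy_response = legacy_get_customer(customer_id)
    return json.dumps(xml_to_dict(legacy_response))
```

The point of the design is that consumers only ever see `gateway_get_customer`. What happens behind it can change later without touching them.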

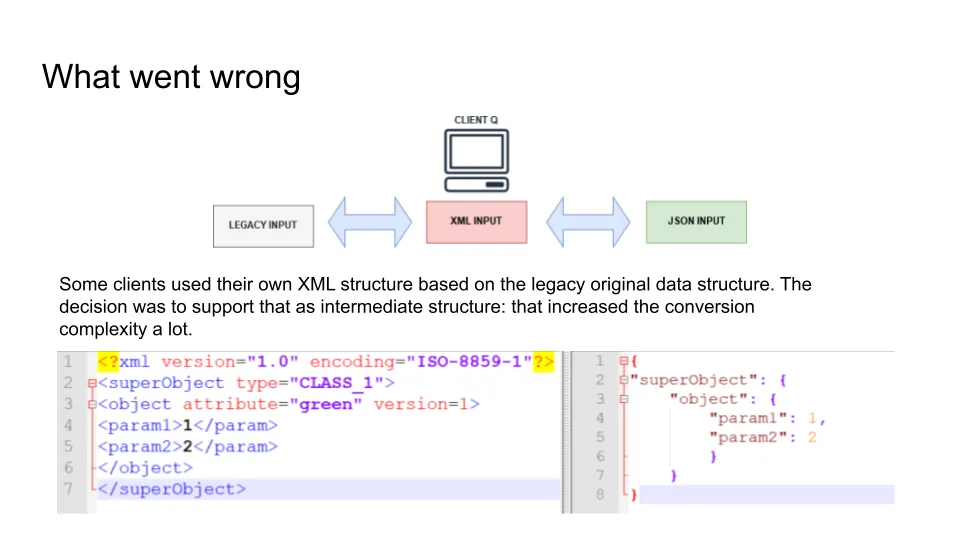

Data mapping and conversion is a massive topic on its own. It is challenging to manage the mapping of multiple huge entities between two formats. But if there is a third format, the conversion complexity doubles, if not triples, especially if you don't own all the structures.

The Team was working on the conversion between the legacy format and the input/output JSON for the Microservices API. But there was already a third structure, used by a Client, with an existing conversion from Legacy to XML and vice versa. The XML was not 100% equal to the Legacy structure and had its own unique data definitions.

So the chain looked like Legacy -> XML -> JSON and, in reverse, JSON -> XML -> Legacy. Supporting that kind of conversion was hell for the Team, so they built a direct conversion between Legacy and JSON. However, the Client did not agree to migrate, so the transformations remained challenging to maintain because both structures were in flux and constantly changed by different teams.
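
One way to keep a direct Legacy <-> JSON conversion maintainable is to drive both directions from a single mapping table, so a change in either structure is made in one place. The sketch below is an assumption, not the Team's actual implementation. The field names are invented, and real entities would also need nested structures and type conversion.

```python
# Hypothetical field names; one mapping table drives both directions.
FIELD_MAP = {
    "CUST_NO": "customerId",
    "CUST_NM": "name",
    "CRNCY_CD": "currency",
}
REVERSE_MAP = {json_key: legacy_key for legacy_key, json_key in FIELD_MAP.items()}


def convert(record: dict, mapping: dict) -> dict:
    """Rename keys by the mapping; fail loudly on fields the mapping does not know."""
    unknown = set(record) - set(mapping)
    if unknown:
        raise KeyError(f"unmapped fields: {sorted(unknown)}")
    return {mapping[key]: value for key, value in record.items()}


def legacy_to_json(record: dict) -> dict:
    return convert(record, FIELD_MAP)


def json_to_legacy(record: dict) -> dict:
    return convert(record, REVERSE_MAP)
```

Failing on unmapped fields is a deliberate choice here: when another team changes a structure, the conversion breaks visibly instead of silently dropping data.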

The most important thing is to have a technical vision of how the Legacy will be decomposed. A deck with a few fancy diagrams is not enough.

Assume we have the API layer and the conversion layer in place. Before we move to the actual decomposition, we need a testing strategy: how will we ensure that the new system does not regress compared to the monolith?

One solution is an orchestrator that calls both the new and old systems and then compares the outcomes. The API layer can handle the calls, and a testing service can do the comparison. That will allow you to track and address possible degradation of the client experience (and catch bugs).
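
A minimal sketch of such a comparison, assuming both systems can be called as plain functions. The names `call_both`, `Comparison`, and `report` are hypothetical. The legacy answer is still the one returned to the consumer, and the new system runs only for comparison.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Comparison:
    request: Any
    legacy_result: Any
    new_result: Any

    @property
    def matches(self) -> bool:
        return self.legacy_result == self.new_result


def call_both(
    request: Any,
    legacy_call: Callable[[Any], Any],
    new_call: Callable[[Any], Any],
    report: Callable[[Comparison], None],
) -> Any:
    """Call both systems, report any mismatch, and return the legacy answer."""
    legacy_result = legacy_call(request)
    try:
        new_result = new_call(request)
    except Exception as error:
        # A failure in the new system is recorded as a mismatch, not raised.
        new_result = error
    comparison = Comparison(request, legacy_result, new_result)
    if not comparison.matches:
        report(comparison)
    return legacy_result
```

In this sketch, `report` could write to a log or a queue that the testing service reads. A crash in the new system never reaches the consumer.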

Now that we have the API layer, conversion, and testing service, we can start cutting off pieces of the monolith and re-implementing them as microservices. Doing it purely Agile, without prior comprehensive system analysis, can turn sour.
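
Cutting off pieces one at a time can be pictured as a routing table inside the API layer: every path goes to the monolith by default, and each re-implemented piece adds one entry. This is a sketch with hypothetical handler names and paths, not a production router.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


def legacy_handler(request: dict) -> dict:
    """Hypothetical stand-in for a call into the wrapped monolith."""
    return {"handled_by": "legacy", "path": request["path"]}


def pricing_service(request: dict) -> dict:
    """Hypothetical stand-in for an already re-implemented microservice."""
    return {"handled_by": "pricing-service", "path": request["path"]}


# Paths that have already been cut off from the monolith.
MIGRATED: Dict[str, Handler] = {"/pricing": pricing_service}


def route(request: dict) -> dict:
    """Send migrated paths to microservices and everything else to the Legacy."""
    handler = MIGRATED.get(request["path"], legacy_handler)
    return handler(request)
```

Rolling a piece back is then a matter of removing its entry from `MIGRATED`, which helps when a freshly cut-off service turns out to be too raw.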

What lies on the surface is low-hanging fruit. However, moving deeper might force changes to the earlier design and make further decomposition much harder.

Let's talk about politics

Internal politics is another reason why it is critical to deliver results early rather than wait until the decomposition is finished. If you have an intermediate result that can handle some of the logic without the monolith, you must push it to Production. First, it is a good (but painful) experience. Second, it is difficult to cancel a project that is already in Production and used by customers.

Inevitably, some of the resources you had at the start will be taken away and reallocated to other business priorities. Or you will be constantly short of resources. So there should be a release strategy from the beginning, preferably one that does not target the distant future.

The Engineering Manager pushed the Team to launch a few already decomposed microservices along with the "wrapped" monolith for a Client product in a significant market. Those services were quite raw, so it was a challenging release with much effort spent on support.

That seemed an unjustified effort at the time, and the Team would rather have focused on stabilization and further decomposition. But now I see that the manager was right: that release was necessary to demonstrate progress and keep the project going.

There is a temptation to introduce some decomposed pieces as a new application with enhanced capabilities previously not seen in the monolith. I call them Sidekicks, and they can outshine the "parent" product and shift the main focus to chasing a quick profit. I covered that topic in a different essay available on my blog.

Such a replacement is a money-consuming endeavor with no evident profit in the foreseeable future. It is a hard sell to executive management. Having a new, exciting application is a temptation to start chasing quick money instead of focusing resources on the legacy replacement.

Red flags

To name a few signs that something is going wrong or will likely go wrong in the future:

- Legacy system components are owned by different teams without a single Leadership.

- The "Legacy" and the "Replacement" are different teams under different management.

- The source code of the Legacy is missing, or the Replacement Team cannot access it.

- Some Legacy documentation cannot be shared with the Replacement Team due to various concerns.

- Sidekicks (see above) are developed in parallel.

- "Deal with it later" attitude toward the next steps.

- Frequent changes in the technology stack: frequent migration to different CI/CD tools, libraries, frameworks, and databases (e.g., MongoDB to PostgreSQL).

Closing thoughts on Part 2

We all know that a system exists only to satisfy the needs of higher systems (to simplify things, we call them "the Business"). And we also know that there are multiple ways to satisfy those needs. It is about making the right choice when answering the "how" question. The people behind a Legacy system once made their choice. Time has shown whether they were right or not.

People behind the new system need to make their own calculated choices based on the context and environment where an organization currently exists. They only need the required time, resources, competence, and dedication. Not so much, right?

In the end, here is my favorite picture on this topic: